OpenAI on Tuesday released two open-weight AI reasoning models with capabilities similar to those of its proprietary o-series. The models are free to download from the developer platform Hugging Face, and the company described them as “state-of-the-art” on several benchmarks used to compare open models.

The two models come in different sizes: the larger gpt-oss-120b, which can run on a single Nvidia GPU, and the lighter gpt-oss-20b, designed to operate on consumer laptops with 16GB of memory. This marks OpenAI’s first open language model release since GPT-2, over five years ago.

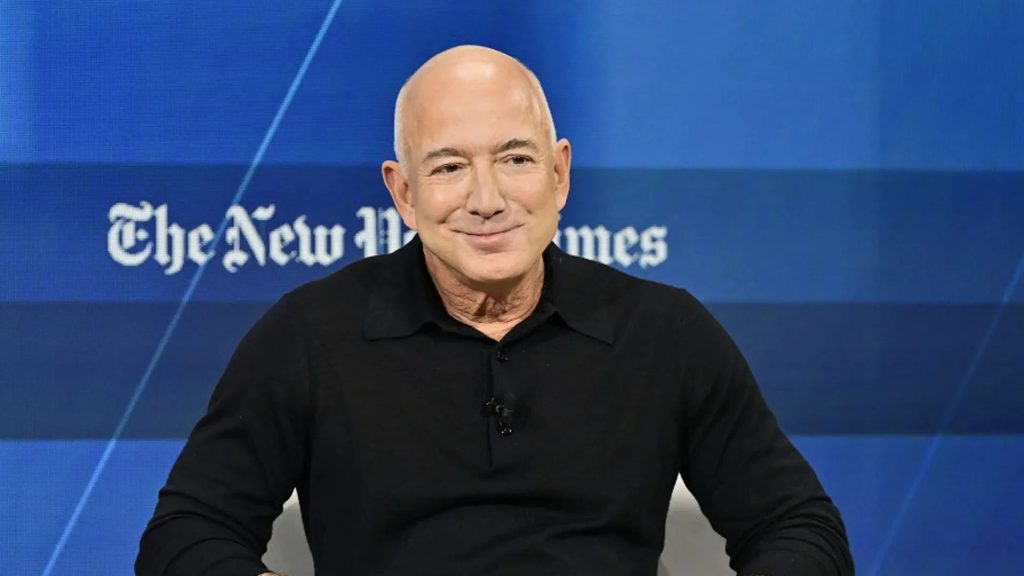

OpenAI explained that the open models can hand off tasks they cannot perform themselves, such as image processing, to the company’s more capable closed models in the cloud. CEO Sam Altman has acknowledged a shift in strategy, saying the company had been “on the wrong side of history” by not open sourcing more of its technology earlier.

“This is a significant step toward ensuring AGI benefits all of humanity,” Altman stated. “We are excited for the world to build on an open AI stack created in the United States, based on democratic values, available for free to all and for wide benefit.”

The models performed strongly on benchmarks. On the competitive coding site Codeforces, gpt-oss-120b and gpt-oss-20b scored 2622 and 2516 respectively, outperforming DeepSeek’s R1 but trailing OpenAI’s own o3 and o4-mini. On Humanity’s Last Exam, a challenging question set, the two models scored 19% and 17.3% respectively, beating rival open models from DeepSeek and Qwen but falling short of the latest o-series models.

However, OpenAI noted that the open models hallucinate, meaning they generate incorrect information, notably more often than its proprietary models. On the company’s internal PersonQA benchmark, gpt-oss-120b and gpt-oss-20b hallucinated in 49% and 53% of responses respectively, compared to 16% for the o1 model and 36% for o4-mini. OpenAI attributes the gap to the open models’ smaller size and more limited world knowledge.

The new models were trained with methods similar to those used for OpenAI’s proprietary models. Both use a mixture-of-experts (MoE) architecture, which activates only a subset of the model’s parameters for any given token. The gpt-oss-120b model has 117 billion total parameters but activates just 5.1 billion per token, which improves speed and efficiency. Reinforcement learning (RL) on large clusters of Nvidia GPUs was used to refine the models’ responses, enabling chain-of-thought reasoning and tool use such as web search and Python code execution. The open models are text-only, however, and cannot process images or audio.
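To put the parameter counts above in perspective, the following Python sketch computes the share of gpt-oss-120b's parameters that is active per token, then shows a toy version of top-k expert routing, the general idea behind MoE layers. Only the 117 billion and 5.1 billion figures come from the article. The function names, the number of experts, the value of `k`, and the toy experts themselves are illustrative assumptions, not details of OpenAI's implementation.

```python
import math


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of a model's parameters used for a single token."""
    return active_params / total_params


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Pick the k highest-scoring experts and normalize their weights.

    Hypothetical helper: real MoE routers are learned layers, and the
    article does not describe how gpt-oss routes tokens.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and blend their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


if __name__ == "__main__":
    # Figures from the article: 5.1B active out of 117B total parameters.
    print(f"Active per token: {active_fraction(5.1e9, 117e9):.1%}")

    # Toy setup (assumed): four "experts" that just scale their input.
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
    logits = [0.1, 2.0, -1.0, 1.5]
    print(route_top_k(logits, k=2))
    print(moe_forward(1.0, experts, logits, k=2))
```

With the article's figures, roughly 4.4% of the model's parameters are used for each token. In the toy example, the two unselected experts are never evaluated, which is where the compute savings come from.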

The models are released under the permissive Apache 2.0 license, which lets enterprises commercialize them without paying OpenAI or seeking its permission. Unlike fully open-source AI labs, however, OpenAI is withholding the training data, a cautious move amid lawsuits alleging unauthorized use of copyrighted material.

The launch was delayed multiple times to address safety concerns. OpenAI assessed whether the models could be fine-tuned for malicious uses, such as cyberattacks or the development of biological or chemical weapons, and concluded that the added risk was marginal and did not reach its threshold for high danger.

While OpenAI’s releases set a new standard among open models, the developer community is eagerly awaiting upcoming releases from competitors, including DeepSeek’s R2 and Meta’s new open superintelligence model.